Microarray analysis techniques

Microarray analysis techniques are used in interpreting the data generated from experiments on DNA (gene chip analysis), RNA, and protein microarrays, which allow researchers to investigate the expression state of a large number of genes, in many cases an organism's entire genome, in a single experiment. Such experiments can generate very large amounts of data, allowing researchers to assess the overall state of a cell or organism. Data in such large quantities is difficult, if not impossible, to analyze without the help of computer programs.

Introduction

Microarray data analysis is the final step in reading and processing data produced by a microarray chip. Samples undergo various processes, including purification and scanning using the microchip, which then produces a large amount of data that requires processing via computer software. The analysis involves several distinct steps, as outlined in the image below. Changing any one of the steps will change the outcome of the analysis, so the MAQC Project was created to identify a set of standard strategies. Companies exist that use the MAQC protocols to perform a complete analysis.

Techniques

Most microarray manufacturers, such as Affymetrix and Agilent, provide commercial data analysis software alongside their microarray products. There are also open source options that utilize a variety of methods for analyzing microarray data.

Aggregation and normalization

Comparing two different arrays, or two different samples hybridized to the same array, generally involves making adjustments for systematic errors introduced by differences in procedures and dye intensity effects. Dye normalization for two-color arrays is often achieved by local regression. LIMMA provides a set of tools for background correction and scaling, as well as an option to average on-slide duplicate spots. A common method for evaluating how well an array has been normalized is to draw an MA plot of the data. MA plots can be produced using programs and languages such as R, MATLAB, and Excel.
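As a minimal sketch of what goes into an MA plot, the snippet below computes the two coordinates for each spot of a two-color array: M, the log2 ratio of the two channels, and A, the mean of their log2 intensities. The function name `ma_values` and the intensity values are hypothetical, and the intensities are assumed to be positive and already background-corrected.

```python
import math

def ma_values(red, green):
    """Return (M, A) lists for paired two-color intensities.

    M is the log2 ratio of the channels and A is the mean log2 intensity.
    """
    m_vals, a_vals = [], []
    for r, g in zip(red, green):
        log_r, log_g = math.log2(r), math.log2(g)
        m_vals.append(log_r - log_g)          # M: log ratio
        a_vals.append(0.5 * (log_r + log_g))  # A: average log intensity
    return m_vals, a_vals

# Hypothetical background-corrected intensities for four spots.
red = [1024.0, 512.0, 256.0, 64.0]
green = [256.0, 512.0, 1024.0, 64.0]
m_vals, a_vals = ma_values(red, green)
```

Plotting `m_vals` against `a_vals` gives the MA plot; for a well-normalized array the cloud of points would be expected to center on M = 0 across the range of A.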

Raw Affy data contains about twenty probes for the same RNA target. Half of these are "mismatch spots", which do not precisely match the target sequence. These can theoretically measure the amount of nonspecific binding for a given target. Robust Multi-array Average (RMA) is a normalization approach that does not take advantage of these mismatch spots, but still must summarize the perfect matches through median polish

The median polish is a simple and robust exploratory data analysis procedure proposed by the statistician John Tukey. The purpose of median polish is to find an additively-fit model for data in a two-way layout table (usually, results from a factor ...

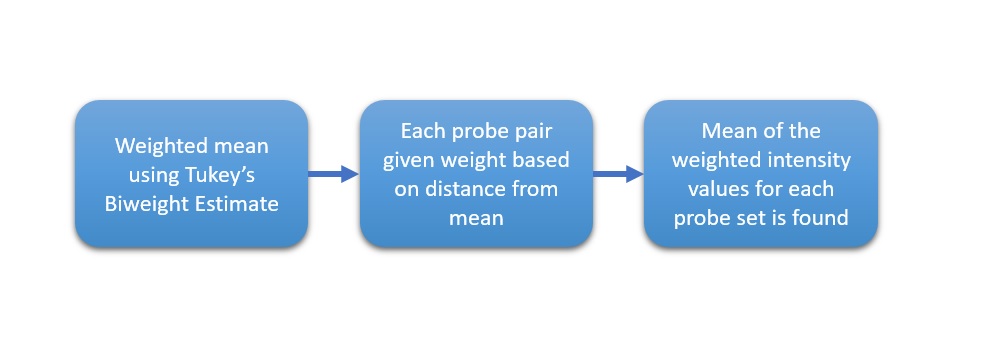

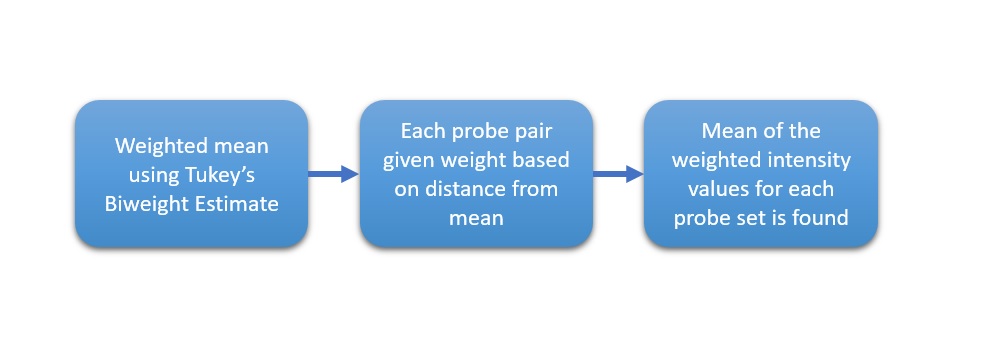

. The median polish algorithm, although robust, behaves differently depending on the number of samples analyzed. Quantile normalization, also part of RMA, is one sensible approach to normalize a batch of arrays in order to make further comparisons meaningful.
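The quantile normalization step can be illustrated with a short sketch. It assumes every array measures the same probes in the same order, and it breaks ties by position rather than averaging tied ranks, which is a simplification. The function name and the data are hypothetical; this is not the RMA implementation itself.

```python
def quantile_normalize(columns):
    """Quantile-normalize equally long arrays (one list of values per array)."""
    n_probes = len(columns[0])
    if any(len(col) != n_probes for col in columns):
        raise ValueError("all arrays must have the same number of probes")
    sorted_cols = [sorted(col) for col in columns]
    # Reference distribution: mean of the k-th smallest value across arrays.
    reference = [sum(col[k] for col in sorted_cols) / len(columns)
                 for k in range(n_probes)]
    normalized = []
    for col in columns:
        # Probe indices ordered by intensity within this array
        # (ties are broken by position, a simplification).
        order = sorted(range(n_probes), key=lambda i: col[i])
        out = [0.0] * n_probes
        for rank, idx in enumerate(order):
            out[idx] = reference[rank]
        normalized.append(out)
    return normalized

# Three hypothetical arrays measuring the same four probes.
arrays = [[5.0, 2.0, 3.0, 4.0],
          [4.0, 1.0, 4.5, 2.0],
          [3.0, 4.0, 6.0, 8.0]]
normalized = quantile_normalize(arrays)
```

After this step every array shares the same set of values, so differences between arrays reflect only the rank of each probe within its array.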

The current Affymetrix MAS5 algorithm, which uses both perfect match and mismatch probes, continues to enjoy popularity and does well in head-to-head tests.

Factor Analysis for Robust Microarray Summarization (FARMS) is a model-based technique for summarizing array data at the perfect match probe level. It is based on a factor analysis model for which a Bayesian maximum a posteriori method optimizes the model parameters under the assumption of Gaussian measurement noise. According to the Affycomp benchmark, FARMS outperformed all other summarization methods with respect to sensitivity and specificity.

Identification of significant differential expression

Many strategies exist to identify array probes that show an unusual level of over-expression or under-expression. The simplest one is to call "significant" any probe that differs by an average of at least twofold between treatment groups. More sophisticated approaches are often related to t-tests or other mechanisms that take both effect size and variability into account. Curiously, the p-values associated with particular genes do not reproduce well between replicate experiments, and lists generated by straight fold change perform much better. This is an extremely important observation, since the point of performing experiments is to predict general behavior. The MAQC group recommends using a fold change assessment plus a non-stringent p-value cutoff, further pointing out that changes in the background correction and scaling process have only a minimal impact on the rank order of fold change differences, but a substantial impact on p-values.
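The twofold rule and a t-type statistic can be sketched side by side. The snippet assumes expression values are already on a log2 scale, so a twofold change corresponds to an absolute difference of 1. Probe names, values, and function names are hypothetical. The Welch t statistic is reported without a p-value because the Python standard library has no t distribution, so the non-stringent p-value filter is left out.

```python
import math
from statistics import mean, variance

def fold_change_and_t(treated, control):
    """Return (log2 fold change, Welch t statistic) for one probe.

    Both inputs are log2 expression values from replicate arrays.
    """
    log2_fc = mean(treated) - mean(control)
    se = math.sqrt(variance(treated) / len(treated) +
                   variance(control) / len(control))
    if se == 0:
        t_stat = 0.0 if log2_fc == 0 else math.copysign(math.inf, log2_fc)
    else:
        t_stat = log2_fc / se
    return log2_fc, t_stat

def call_twofold(probes, min_abs_log2_fc=1.0):
    """Keep probes changing at least twofold, ranked by |log2 fold change|."""
    results = []
    for name, (treated, control) in probes.items():
        fc, t_stat = fold_change_and_t(treated, control)
        if abs(fc) >= min_abs_log2_fc:
            results.append((name, fc, t_stat))
    return sorted(results, key=lambda row: abs(row[1]), reverse=True)

# Hypothetical log2 expression values: (treated replicates, control replicates).
probes = {
    "probe_A": ([8.1, 7.9, 8.0], [6.0, 6.2, 5.8]),  # about fourfold up
    "probe_B": ([5.1, 4.9, 5.0], [5.0, 5.2, 4.8]),  # essentially unchanged
    "probe_C": ([3.0, 2.8, 3.2], [4.6, 4.4, 4.5]),  # almost threefold down
}
hits = call_twofold(probes)
```

Ranking by fold change first and applying a loose significance filter afterwards follows the spirit of the MAQC recommendation described above.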

Clustering

Clustering is a data mining technique used to group genes having similar expression patterns. Hierarchical clustering and k-means clustering are widely used techniques in microarray analysis.

Hierarchical clustering

Hierarchical clustering is a statistical method for finding relatively homogeneous clusters. Hierarchical clustering consists of two separate phases. Initially, a distance matrix containing all the pairwise distances between the genes is calculated. Pearson's correlation and Spearman's correlation are often used as dissimilarity estimates, but other methods, like Manhattan distance or Euclidean distance, can also be applied. Given the number of distance measures available and their influence on the clustering algorithm results, several studies have compared and evaluated different distance measures for the clustering of microarray data, considering their intrinsic properties and robustness to noise. After calculation of the initial distance matrix, the hierarchical clustering algorithm either (A) iteratively joins the two closest clusters starting from single data points (the agglomerative, bottom-up approach, which is the more commonly used), or (B) iteratively partitions clusters starting from the complete set (the divisive, top-down approach). After each step, a new distance matrix between the newly formed clusters and the other clusters is recalculated. Hierarchical cluster analysis methods include:

*Single linkage (minimum method, nearest neighbor)

*Average linkage (UPGMA)

*Complete linkage (maximum method, furthest neighbor)

Different studies have already shown empirically that the single linkage clustering algorithm produces poor results when applied to gene expression microarray data and thus should be avoided.
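The two phases of the agglomerative approach can be sketched in a few lines. The example uses 1 minus Pearson's correlation as the dissimilarity and lets the caller pick one of the three linkage methods listed above. The gene names and profiles are hypothetical, every profile is assumed to have non-zero variance, and the naive search over all cluster pairs is meant for illustration rather than large data sets.

```python
import math

def pearson_distance(x, y):
    """Dissimilarity 1 - r, where r is Pearson's correlation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return 1.0 - cov / (sx * sy)

# How the distance between two clusters is derived from member distances.
LINKAGE = {
    "single": min,
    "complete": max,
    "average": lambda d: sum(d) / len(d),
}

def agglomerate(profiles, n_clusters, linkage="average"):
    """Merge the two closest clusters until n_clusters remain."""
    names = list(profiles)
    # Phase 1: pairwise distance matrix between individual genes.
    dist = {(a, b): pearson_distance(profiles[a], profiles[b])
            for a in names for b in names if a != b}
    combine = LINKAGE[linkage]
    clusters = [[name] for name in names]
    # Phase 2: bottom-up merging of the closest pair of clusters.
    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = combine([dist[a, b]
                             for a in clusters[i] for b in clusters[j]])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters

# Hypothetical expression profiles across four conditions.
profiles = {
    "gene1": [1.0, 2.0, 3.0, 4.0],
    "gene2": [2.0, 4.0, 6.0, 8.5],
    "gene3": [4.0, 3.0, 2.0, 1.0],
    "gene4": [8.0, 6.5, 4.0, 2.0],
}
clusters = agglomerate(profiles, n_clusters=2, linkage="average")
```

Here the cluster-to-cluster distance is recomputed from the original gene-level distances at every step, which for these three linkage rules is equivalent to updating the distance matrix after each merge.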

K-means clustering

K-means clustering is an algorithm for grouping genes or samples based on pattern into ''K'' groups. Grouping is done by minimizing the sum of the squares of distances between the data and the corresponding cluster centroid. Thus the purpose of K-means clustering is to classify data based on similar expression. The K-means clustering algorithm and some of its variants (including k-medoids) have been shown to produce good results for gene expression data (at least better than hierarchical clustering methods). Empirical comparisons of k-means, k-medoids, hierarchical methods, and different distance measures can be found in the literature.
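A minimal sketch of the standard iterative scheme (Lloyd's algorithm) shows how the sum of squared distances to the centroids is reduced. The starting centroids are supplied by the caller so that the example is deterministic; in practice they are usually chosen at random and the run repeated. The data points and function names are hypothetical.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equally long vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def k_means(points, centroids, max_iter=100):
    """Lloyd's algorithm from caller-supplied starting centroids."""
    centroids = [list(c) for c in centroids]
    labels = None
    for _ in range(max_iter):
        # Assignment step: nearest centroid by squared Euclidean distance.
        new_labels = [min(range(len(centroids)),
                          key=lambda k: squared_distance(p, centroids[k]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:  # an empty cluster keeps its previous centroid
                centroids[k] = [sum(col) / len(members)
                                for col in zip(*members)]
    return labels, centroids

# Hypothetical two-condition expression values for six genes.
points = [[1.0, 1.1], [0.9, 1.0], [1.2, 0.8],
          [5.0, 5.2], [5.1, 4.9], [4.8, 5.0]]
labels, centroids = k_means(points, centroids=[points[0], points[3]])
```

Replacing the mean in the update step with the most central member of each cluster would give a k-medoids-style variant.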

Pattern recognition

Commercial systems for gene network analysis such as Ingenuity and Pathway Studio create visual representations of differentially expressed genes based on current scientific literature. Non-commercial tools such as FunRich, GenMAPP and Moksiskaan also aid in organizing and visualizing gene network data procured from one or several microarray experiments. A wide variety of microarray analysis tools are available through Bioconductor, written in the R programming language. The frequently cited SAM module and other microarray tools are available through Stanford University. Another set is available from Harvard and MIT.

Specialized software tools for statistical analysis to determine the extent of over- or under-expression of a gene in a microarray experiment relative to a reference state have also been developed to aid in identifying genes or gene sets associated with particular phenotypes. One such method of analysis, known as Gene Set Enrichment Analysis (GSEA), uses a Kolmogorov-Smirnov-style statistic to identify groups of genes that are regulated together. This third-party statistics package offers the user information on the genes or gene sets of interest, including links to entries in databases such as NCBI's GenBank and curated databases such as Biocarta and Gene Ontology. The Protein Complex Enrichment Analysis Tool (COMPLEAT) provides similar enrichment analysis at the level of protein complexes. The tool can identify dynamic protein complex regulation under different conditions or time points. Related systems, PAINT and SCOPE, perform a statistical analysis on gene promoter regions, identifying over- and under-representation of previously identified transcription factor response elements. Another statistical analysis tool is Rank Sum Statistics for Gene Set Collections (RssGsc), which uses rank sum probability distribution functions to find gene sets that explain experimental data. A further approach is contextual meta-analysis, i.e. finding out how a gene cluster responds to a variety of experimental contexts. Genevestigator is a public tool to perform contextual meta-analysis across contexts such as anatomical parts, stages of development, and response to diseases, chemicals, stresses, and neoplasms.
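To make the idea of a Kolmogorov-Smirnov-style statistic concrete, the sketch below computes an unweighted running-sum enrichment score over a ranked gene list. This is an illustration only: it is the plain, unweighted form of the statistic, it performs no significance assessment, and the gene names and the function `enrichment_score` are hypothetical rather than part of any GSEA package.

```python
def enrichment_score(ranked_genes, gene_set):
    """Unweighted Kolmogorov-Smirnov-style running-sum statistic.

    Walk down the ranked list, stepping up at genes in the set and down
    at genes outside it; return the largest deviation from zero.
    """
    hits = [gene in gene_set for gene in ranked_genes]
    n_hit = sum(hits)
    n_miss = len(ranked_genes) - n_hit
    if n_hit == 0 or n_miss == 0:
        raise ValueError("the gene set must cover part, not all, of the list")
    running, best = 0.0, 0.0
    for is_hit in hits:
        running += 1.0 / n_hit if is_hit else -1.0 / n_miss
        if abs(running) > abs(best):
            best = running
    return best

# Hypothetical list, ranked from most up-regulated to most down-regulated.
ranked = ["g1", "g2", "g3", "g4", "g5", "g6"]
es_top = enrichment_score(ranked, {"g1", "g2"})      # set clustered at the top
es_bottom = enrichment_score(ranked, {"g5", "g6"})   # set clustered at the bottom
```

A set whose members cluster at the top of the ranking scores close to +1 and one clustered at the bottom scores close to -1, while a set scattered through the list stays near zero.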

Significance analysis of microarrays (SAM)

Significance analysis of microarrays (SAM) is a statistical technique, established in 2001 by Virginia Tusher, Robert Tibshirani and Gilbert Chu, for determining whether changes in gene expression are statistically significant. With the advent of DNA microarrays, it is now possible to measure the expression of thousands of genes in a single hybridization experiment. The data generated is considerable, and a method for sorting out what is significant and what is not is essential. SAM is distributed by Stanford University as an R package.

SAM identifies statistically significant genes by carrying out gene-specific t-tests and computes a statistic d_j for each gene j, which measures the strength of the relationship between gene expression and a response variable (Chu, G., Narasimhan, B., Tibshirani, R., Tusher, V., "SAM 'Significance Analysis of Microarrays' Users Guide and technical document"). This analysis uses non-parametric statistics, since the data may not follow a normal distribution. The response variable describes and groups the data based on experimental conditions. In this method, repeated permutations of the data are used to determine whether the expression of any gene is significantly related to the response. The use of permutation-based analysis accounts for correlations in genes and avoids parametric assumptions about the distribution of individual genes. This is an advantage over other techniques (e.g., ANOVA and Bonferroni), which assume equal variance and/or independence of genes.
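The permutation idea can be sketched for the two-class unpaired case. This is a simplified illustration, not the SAM software. The relative difference is taken as d = r / (s + s_0), with r the difference in group means and s a pooled standard error. The constant `s0` is passed in as a fixed value, and a gene is called when its observed ordered score differs from the permutation average by more than `delta`. The false discovery rate is estimated from the mean number of permuted scores beyond the weakest called scores. All names and data are hypothetical.

```python
import math
import random

def sam_d(values, labels, s0):
    """Relative difference d = r / (s + s0) for one gene (two-class unpaired)."""
    g1 = [v for v, lab in zip(values, labels) if lab == 1]
    g0 = [v for v, lab in zip(values, labels) if lab == 0]
    n1, n0 = len(g1), len(g0)
    m1, m0 = sum(g1) / n1, sum(g0) / n0
    ss = sum((v - m1) ** 2 for v in g1) + sum((v - m0) ** 2 for v in g0)
    s = math.sqrt((1.0 / n1 + 1.0 / n0) * ss / (n1 + n0 - 2))
    return (m1 - m0) / (s + s0)

def sam(data, labels, s0=0.1, delta=5.0, n_perm=200, seed=0):
    """Return (names of genes called significant, estimated FDR)."""
    rng = random.Random(seed)
    genes = list(data)
    observed = sorted((sam_d(data[g], labels, s0), g) for g in genes)
    # Ordered null scores: one sorted list per random label permutation.
    null_sorted = []
    perm = list(labels)
    for _ in range(n_perm):
        rng.shuffle(perm)
        null_sorted.append(sorted(sam_d(data[g], perm, s0) for g in genes))
    # Expected ordered score: average of each order statistic over permutations.
    expected = [sum(col) / n_perm for col in zip(*null_sorted)]
    called = [(d, g) for (d, g), e in zip(observed, expected)
              if abs(d - e) > delta]
    if not called:
        return [], 0.0
    # Cut points: the weakest positive and weakest negative called scores.
    cut_up = min((d for d, _ in called if d > 0), default=math.inf)
    cut_down = max((d for d, _ in called if d < 0), default=-math.inf)
    false_calls = [sum(1 for d in null if d >= cut_up or d <= cut_down)
                   for null in null_sorted]
    fdr = min(1.0, (sum(false_calls) / n_perm) / len(called))
    return [g for _, g in called], fdr

# Hypothetical log-scale data: four control arrays (0), four treated arrays (1).
labels = [0, 0, 0, 0, 1, 1, 1, 1]
data = {
    "up":    [1.0, 1.2, 0.9, 1.1, 5.0, 5.2, 4.9, 5.1],
    "down":  [5.0, 5.2, 4.9, 5.1, 1.0, 1.2, 0.9, 1.1],
    "flat1": [2.0, 2.1, 1.9, 2.0, 2.0, 1.9, 2.1, 2.0],
    "flat2": [3.0, 3.1, 3.0, 2.9, 3.1, 3.0, 2.9, 3.0],
    "flat3": [4.0, 3.9, 4.1, 4.0, 4.1, 4.0, 3.9, 4.1],
    "flat4": [1.5, 1.6, 1.5, 1.4, 1.4, 1.5, 1.6, 1.5],
}
significant, fdr = sam(data, labels)
```

Raising `delta` calls fewer genes and would be expected to lower the estimated false discovery rate, which is the trade-off the Delta tuning parameter controls.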

Basic protocol

*Perform microarray experiments: DNA microarray with oligo and cDNA primers, SNP arrays, protein arrays, etc.
*Input Expression Analysis in Microsoft Excel (see below)
*Run SAM as a Microsoft Excel Add-In
*Adjust the Delta tuning parameter to get a significant number of genes along with an acceptable false discovery rate (FDR), and assess sample size by calculating the mean difference in expression in the SAM Plot Controller
*List differentially expressed genes (positively and negatively expressed genes)

Running SAM

*SAM is available for download online at http://www-stat.stanford.edu/~tibs/SAM/ for academic and non-academic users after completion of a registration step.
*SAM is run as an Excel Add-In, and the SAM Plot Controller allows customization of the False Discovery Rate and Delta, while the SAM Plot and SAM Output functionality generate a List of Significant Genes, Delta Table, and Assessment of Sample Sizes
*Permutations are calculated based on the number of samples
*Block Permutations
**Blocks are batches of microarrays; for example, for eight samples split into two groups (control and affected) there are 4!=24 permutations for each block and the total number of permutations is (24)(24)=576. A minimum of 1000 permutations are recommended; the number of permutations is set by the user when imputing correct values for the data set to run SAM
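The arithmetic in the block permutation example can be checked with a short sketch. It assumes the eight arrays form two blocks of four, that labels are shuffled only within a block, and that the per-block counts multiply. The function name is hypothetical.

```python
import math

def block_permutation_count(block_sizes):
    """Number of label permutations when shuffling only within each block."""
    total = 1
    for size in block_sizes:
        total *= math.factorial(size)  # size! orderings inside one block
    return total

# Eight arrays processed as two blocks (batches) of four arrays each.
per_block = block_permutation_count([4])
total = block_permutation_count([4, 4])
```

Because the total for such a small design falls below the recommended minimum of 1000, all distinct permutations can simply be enumerated instead of sampled.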

Response formats

Types:
*Quantitative: real-valued (such as heart rate)
*One class: tests whether the mean gene expression differs from zero
*Two class: two sets of measurements
**Unpaired: measurement units are different in the two groups; e.g. control and treatment groups with samples from different patients
**Paired: the same experimental units are measured in the two groups; e.g. samples before and after treatment from the same patients
*Multiclass: more than two groups, each containing different experimental units; a generalization of the two class unpaired type
*Survival: data of a time until an event (for example death or relapse)
*Time course: each experimental unit is measured at more than one time point; experimental units fall into a one or two class design
*Pattern discovery: no explicit response parameter is specified; the user specifies an eigengene (principal component) of the expression data and treats it as a quantitative response

Algorithm

SAM calculates a test statistic for relative difference in gene expression based on permutation analysis of expression data and calculates a false discovery rate. The principal calculations of the program are illustrated below. The s_0 constant is chosen to minimize the coefficient of variation of d_i. r_i is equal to the expression levels (x) for gene i under y experimental conditions. Fold changes (t) are specified to guarantee that genes called significant change by at least a pre-specified amount. This means that the absolute value of the average expression levels of a gene under each of two conditions must be greater than the fold change (t) to be called positive and less than the inverse of the fold change (t) to be called negative. The SAM algorithm can be stated as:

#Order test statistics according to magnitude
#For each permutation, compute the ordered null (unaffected) scores
#Plot the ordered test statistic against the expected null scores
#Call each gene significant if the absolute value of the test statistic for that gene minus the mean test statistic for that gene is greater than a stated threshold
#Estimate the false discovery rate based on expected versus observed values

Output

*Significant gene sets
**Positive gene set: higher expression of most genes in the gene set correlates with higher values of the phenotype
**Negative gene set: lower expression of most genes in the gene set correlates with higher values of the phenotype

SAM features

*Data from oligo or cDNA arrays, SNP arrays, protein arrays, etc. can be utilized in SAM
*Correlates expression data to clinical parameters
*Correlates expression data with time
*Uses data permutation to estimate the false discovery rate for multiple testing
*Reports the local false discovery rate (the FDR for genes having a similar d_i as that gene) and miss rates
*Can work with a blocked design for when treatments are applied within different batches of arrays
*Can adjust the threshold determining the number of genes called significant

Error correction and quality control

Quality control

Entire arrays may have obvious flaws detectable by visual inspection, pairwise comparisons to arrays in the same experimental group, or by analysis of RNA degradation. Results may improve by removing these arrays from the analysis entirely.

Background correction

Depending on the type of array, signal related to nonspecific binding of the fluorophore can be subtracted to achieve better results. One approach involves subtracting the average signal intensity of the area between spots. A variety of tools for background correction and further analysis are available from TIGR, Agilent (GeneSpring), and Ocimum Bio Solutions (Genowiz).

Spot filtering

Visual identification of local artifacts, such as printing or washing defects, may likewise suggest the removal of individual spots. This can take a substantial amount of time depending on the quality of array manufacture. In addition, some procedures call for the elimination of all spots with an expression value below a certain intensity threshold.

See also

*Microarray databases
*Significance analysis of microarrays
*Transcriptomics
*Proteomics

References

*Tusher, V. G.; Tibshirani, R.; et al. (2001). "Significance analysis of microarrays applied to the ionizing radiation response". Proceedings of the National Academy of Sciences. 98 (9): 5116–5121. Bibcode: 2001PNAS...98.5116G. doi:10.1073/pnas.091062498. PMID 11309499. PMC 33173. http://www-stat.stanford.edu/~tibs/SAM/pnassam.pdf
*Zang, S.; Guo, R.; et al. (2007). "Integration of statistical inference methods and a novel control measure to improve sensitivity and specificity of data analysis in expression profiling studies". Journal of Biomedical Informatics. 40 (5): 552–560. doi:10.1016/j.jbi.2007.01.002. PMID 17317331.

External links

*ArrayExplorer - Compare microarrays side by side to find the one that best suits your research needs (software)
*StatsArray - Online Microarray Analysis Services (software)
*ArrayMining.net - web application for online analysis of microarray data (software)
*FunRich - Perform gene set enrichment analysis (software)
*Comparative Transcriptomics Analysis (Reference Module in Life Sciences)
*GeneChip® Expression Analysis-Data Analysis Fundamentals (by Affymetrix)
*Duke data_analysis_fundamentals_manual
*Microarrays Bioinformatics algorithms